Introduction to blocks, files and objects

Data is now everywhere. By one widely cited estimate, humans created 90% of the world’s data in the last two years alone. That explosive growth has transformed the storage world: what was once a simple part of the IT landscape is now a vibrant zoo of different solutions, technologies and terminologies, all promising to store your data by different means. In this article we will highlight three types of storage systems, how they differ and what their strengths are.

Welcome to the world of block, file and object storage.

First there were blocks

Imagine going to the store and buying a shiny new SSD. Perhaps surprisingly, you cannot store files on it out of the box without a little assistance: the device has no notion of what a “file” is. All it understands are “blocks”, fixed-size chunks of bytes that are each identified by a number. These atomic storage primitives leave it entirely up to the operating system to decide how the data is laid out. Most of us never deal with block storage directly; instead, we install a file system on top of it so we can store our information in the familiar structure of “files” and “folders”.

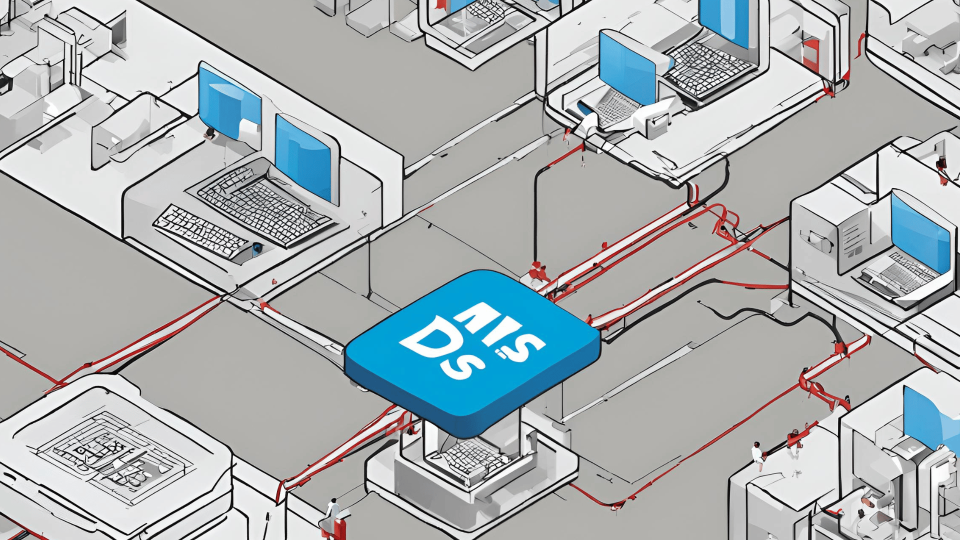
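To illustrate that layering, here is a deliberately tiny sketch: a simulated block device that only knows how to read and write numbered, fixed-size blocks, and a hypothetical toy file system on top of it that maps file names to lists of block numbers. The 4096-byte block size is an assumption (512 and 4096 bytes are both common), and real file systems also handle directories, permissions, free-space tracking and crash consistency, none of which is modelled here.

```python
BLOCK_SIZE = 4096  # assumed block size in bytes


class BlockDevice:
    """In-memory stand-in for a raw disk: it only understands numbered blocks."""

    def __init__(self, num_blocks: int) -> None:
        self.num_blocks = num_blocks
        self._blocks = [bytes(BLOCK_SIZE) for _ in range(num_blocks)]

    def read_block(self, lba: int) -> bytes:
        """Return the full contents of block number `lba`."""
        return self._blocks[lba]

    def write_block(self, lba: int, data: bytes) -> None:
        """Overwrite block `lba`, zero-padding short writes to a whole block."""
        if len(data) > BLOCK_SIZE:
            raise ValueError("data does not fit in one block")
        self._blocks[lba] = data.ljust(BLOCK_SIZE, b"\0")


class TinyFileSystem:
    """Hypothetical toy file system: a table mapping names to block numbers."""

    def __init__(self, device: BlockDevice) -> None:
        self.device = device
        self.free = list(range(device.num_blocks))  # block numbers not in use
        self.table: dict[str, tuple[list[int], int]] = {}  # name -> (blocks, size)

    def write_file(self, name: str, data: bytes) -> None:
        old_blocks = self.table[name][0] if name in self.table else []
        chunks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
        if len(chunks) > len(self.free) + len(old_blocks):
            raise OSError("no space left on device")
        self.free.extend(old_blocks)  # release the old blocks when overwriting
        blocks = [self.free.pop(0) for _ in chunks]
        for lba, chunk in zip(blocks, chunks):
            self.device.write_block(lba, chunk)
        self.table[name] = (blocks, len(data))

    def read_file(self, name: str) -> bytes:
        blocks, size = self.table[name]
        raw = b"".join(self.device.read_block(lba) for lba in blocks)
        return raw[:size]  # drop the zero padding in the last block


disk = BlockDevice(num_blocks=16)
fs = TinyFileSystem(disk)
fs.write_file("notes.txt", b"hello blocks " * 500)  # 6,500 bytes -> 2 blocks
print(fs.table["notes.txt"])  # ([0, 1], 6500)
```

The device never learns that “notes.txt” exists; only the file system’s table knows which blocks belong to which name.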

Block storage is what operating systems are installed on, because they need direct access to the disk with no abstractions in the way, so it’s no surprise that this type of storage is critical to servers. Whether Linux- or Windows-based, they are all installed directly on block storage. In the scenario above, this is a raw disk directly attached to the computer, but in an enterprise or cloud environment block storage is typically deployed in a SAN (storage area network) architecture: a dedicated network of storage servers that present their disks over the network for servers or virtual machines to consume directly. Block storage is lightning fast because it has no strings attached: you can write any data to any block, whenever and however you like. At Vokke, we use AWS’s “Elastic Block Store” (EBS) offering to power our virtual machines, and on-premises we use software-defined storage via Ceph to “carve up” physical disks into many “virtual block devices” for our virtual machines to consume.
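To make the “carving up” a little more concrete, here is a simplified sketch of how a virtual block device can be spread across a storage cluster. Ceph’s RBD (RADOS Block Device) stripes each virtual disk image into fixed-size objects, 4 MiB by default; the functions below map a byte offset on the virtual disk to the object that holds it and split an I/O that crosses object boundaries. The image name and the object naming scheme are hypothetical, and real Ceph also decides where each object lives (via CRUSH) and replicates it, which is not shown.

```python
OBJECT_SIZE = 4 * 1024 * 1024  # 4 MiB, the default RBD object size


def locate(image: str, offset: int) -> tuple[str, int]:
    """Map a byte offset on a virtual disk to (object name, offset inside that object)."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    object_no, inner = divmod(offset, OBJECT_SIZE)
    # Hypothetical naming scheme: image name plus a zero-padded hex object number.
    return f"{image}.{object_no:016x}", inner


def split_io(image: str, offset: int, length: int) -> list[tuple[str, int, int]]:
    """Split one read/write on the virtual disk into per-object (name, offset, length) pieces."""
    pieces = []
    while length > 0:
        name, inner = locate(image, offset)
        n = min(length, OBJECT_SIZE - inner)  # stop at the object boundary
        pieces.append((name, inner, n))
        offset += n
        length -= n
    return pieces


# A 300-byte write straddling the boundary between the first two objects:
print(split_io("vm-disk-01", OBJECT_SIZE - 100, 300))
# [('vm-disk-01.0000000000000000', 4194204, 100), ('vm-disk-01.0000000000000001', 0, 200)]
```

Because every piece of the virtual disk is just an object with a predictable name, the cluster can spread one VM’s disk over many physical drives.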

And then we had files

File storage is what most people are comfortable with, since nearly everyone is familiar with the concept of a “file” and a “folder”. The data you want to save is placed on the storage medium, given a name and a location within a directory, and job done. File storage is found on every home PC (think of your C:\ drive) as well as on USB drives and CD-ROMs (writing that made me realize how old I’m getting…).

File storage has evolved into quite a complex beast. Many file systems support permissions, such as granting specific users or user groups read or write privileges. Others support file locking, which is crucial for systems like databases that need to write data to disk confident that another process won’t overwrite it at the same time.
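Both features are exposed through ordinary operating-system calls. The sketch below assumes a Unix-like system (Python’s `fcntl` module is not available on Windows): it appends to a file while holding an exclusive lock, then restricts the file’s permissions. The file name `ledger.log` is hypothetical. Note that `flock` locks are advisory, meaning they only keep out other processes that also ask for the lock.

```python
import fcntl
import os
import stat
import tempfile


def append_locked(path: str, data: bytes) -> None:
    """Append `data` to `path` while holding an exclusive (advisory) lock."""
    with open(path, "ab") as f:
        fcntl.flock(f.fileno(), fcntl.LOCK_EX)  # waits until no other process holds the lock
        try:
            f.write(data)
            f.flush()             # move Python's buffer into the OS
            os.fsync(f.fileno())  # ask the OS to push the data to the storage device
        finally:
            fcntl.flock(f.fileno(), fcntl.LOCK_UN)


path = os.path.join(tempfile.mkdtemp(), "ledger.log")  # hypothetical file name
append_locked(path, b"entry 1\n")

# Permissions: owner may read/write, group may read, everyone else gets nothing.
os.chmod(path, 0o640)
print(oct(stat.S_IMODE(os.stat(path).st_mode)))  # 0o640
```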

The specific way files are written to the underlying storage medium is controlled by a so-called “file system”. If you’re running Windows right now, your files are almost certainly stored using the NTFS file system. If you’re running Linux you have a bit more choice, and might be using EXT4, Btrfs, or ZFS. At Vokke, our Windows servers run on NTFS, our Linux servers run on EXT4 and ZFS, and our Ceph cluster stores its data using BlueStore, Ceph’s own storage backend, which writes directly to raw disks rather than going through a conventional file system.
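On Linux, one way to check which file system a given path lives on is to read `/proc/mounts`, where each line lists a device, its mount point, the file system type and the mount options (`df -T <path>` gives the same answer from the shell). The helper below is a minimal sketch: it picks the longest mount point that contains the path, which is how a separately mounted `/home` takes precedence over `/`. The sample mount table is made up for illustration, and the path should be absolute (for example, passed through `os.path.realpath` first).

```python
def fs_type_for(path: str, mounts_text: str) -> str:
    """Return the file system type of the mount containing `path`.

    `mounts_text` is in /proc/mounts format: 'device mountpoint fstype options dump pass'.
    """
    best_mount, best_type = "", "unknown"
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        # Spaces inside mount points are escaped as \040 in /proc/mounts.
        mount_point = fields[1].replace("\\040", " ")
        prefix = mount_point.rstrip("/") + "/"
        inside = path == mount_point or path.startswith(prefix)
        # Longest match wins; on ties, a later (stacked) mount wins.
        if inside and len(mount_point) >= len(best_mount):
            best_mount, best_type = mount_point, fields[2]
    return best_type


sample = """/dev/sda2 / ext4 rw,relatime 0 0
tank/home /home zfs rw,xattr 0 0
proc /proc proc rw,nosuid 0 0"""

print(fs_type_for("/home/alice/notes.txt", sample))  # zfs
print(fs_type_for("/etc/hosts", sample))             # ext4
# On a real machine: fs_type_for(os.path.realpath(p), open("/proc/mounts").read())
```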

File systems excel because they provide specific guarantees about the data, abstract away the underlying medium (you can move the same file from a hard drive to a USB drive with no issue), and neatly organize data into a structure both humans and machines can understand and manipulate. Unfortunately, they hit limits when the number of files becomes very large: they are notoriously hard to scale out without giving up some of those guarantees, and once-beneficial features such as locking and permissions can start to bog the system down. There’s a reason most of us have never seen a folder with more than a million files in it – it just doesn’t work that well.
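A common workaround for the “too many files in one folder” problem is to fan files out across nested subdirectories named after a hash of the file name; Git does something similar with its loose objects, using the first two hex characters of each object’s hash as a directory name. The sketch below is one hypothetical way to do this. With two levels of two hex characters there are 256 × 256 = 65,536 leaf directories, so a million files average roughly 15 per directory.

```python
import hashlib
from pathlib import PurePosixPath


def sharded_path(root: str, name: str, levels: int = 2, width: int = 2) -> PurePosixPath:
    """Place `name` under `levels` nested directories taken from its SHA-256 hash."""
    digest = hashlib.sha256(name.encode("utf-8")).hexdigest()
    shards = [digest[i * width:(i + 1) * width] for i in range(levels)]
    return PurePosixPath(root, *shards, name)


p = sharded_path("/data/uploads", "invoice-2024-001.pdf")
print(p)  # /data/uploads/<2 hex chars>/<2 hex chars>/invoice-2024-001.pdf
```

This keeps every individual directory small, but it is a workaround layered on top of the file system rather than a change in what the file system itself can do.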

And now we have objects, too

So who comes to the rescue when our file system is no longer performing up to par? As with most things in storage, you rarely get to have your cake and eat it too, and this is no exception. If you remove folders, file locking, and various other low-level (but incredibly useful) functionality, you end up with object storage: a method of storing data in a flat, non-hierarchical namespace, where each discrete unit stored is called an object and is identified by a unique key. Sure, you lose quite a bit of functionality, but what you get in return is scalability that is, for practical purposes, unlimited. And the scalability is real – Amazon’s object storage service, S3, held more than two trillion objects globally by the end of 2013.
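The sketch below is a toy, in-memory model of that flat structure, not a real client. Keys such as `photos/2024/beach.jpg` are plain strings; the slashes only look like folders. Listing with a prefix and a delimiter, modelled loosely on the `Prefix` and `Delimiter` parameters of S3’s ListObjectsV2 API, is how object stores let clients browse as if folders existed. The class and method names are hypothetical.

```python
from typing import Optional


class FlatObjectStore:
    """Toy in-memory object store: one flat dictionary from key to bytes."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data  # whole-object write; replaces any previous version

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def list_keys(self, prefix: str = "",
                  delimiter: Optional[str] = None) -> tuple[list[str], list[str]]:
        """Return (keys, common_prefixes) in the spirit of S3's Prefix/Delimiter listing."""
        keys, common = [], set()
        for key in sorted(self._objects):
            if not key.startswith(prefix):
                continue
            rest = key[len(prefix):]
            if delimiter and delimiter in rest:
                # Roll everything up to the next delimiter into one pseudo-"folder".
                common.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
            else:
                keys.append(key)
        return keys, sorted(common)


store = FlatObjectStore()
for key in ["photos/2024/beach.jpg", "photos/2024/city.jpg", "photos/readme.txt", "backup.tar"]:
    store.put(key, b"...")
print(store.list_keys("photos/", "/"))  # (['photos/readme.txt'], ['photos/2024/'])
```

Because there is no directory tree to maintain or lock, the store only has to answer “which keys start with this string?”, a question that can be partitioned across many machines.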

Because the fundamental primitive has changed, objects are not meant to be accessed through a file system; mounting object storage as another drive letter on Windows, for example, is not really the intended use case. Instead, you typically access objects over HTTP, either through REST API calls or directly in the browser. Either way, the interface sits at a higher level: you store and retrieve whole objects by key rather than modifying individual bytes in place. This means you generally can’t run traditional applications, which expect a file system, directly on top of an object storage layer. Instead, you use it to store very large numbers of files at scale.
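As a rough sketch of what access over HTTP looks like, the snippet below builds (but does not send) GET and PUT requests for an object using S3’s virtual-hosted-style URL format, `https://<bucket>.s3.<region>.amazonaws.com/<key>`. The bucket name, region and key are hypothetical. Requests to private buckets must also carry AWS Signature Version 4 authentication headers, which are omitted here; in practice an SDK such as boto3 handles the signing for you.

```python
from urllib.parse import quote
from urllib.request import Request


def object_url(bucket: str, region: str, key: str) -> str:
    """Virtual-hosted-style S3 URL; '/' in the key is kept, other characters are escaped."""
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key, safe='/')}"


def get_object_request(bucket: str, region: str, key: str) -> Request:
    """Unsigned GET for a whole object (only enough for publicly readable objects)."""
    return Request(object_url(bucket, region, key), method="GET")


def put_object_request(bucket: str, region: str, key: str,
                       data: bytes, content_type: str) -> Request:
    """Unsigned PUT that uploads `data` as a complete object, replacing any existing one."""
    return Request(object_url(bucket, region, key), data=data, method="PUT",
                   headers={"Content-Type": content_type})


req = get_object_request("example-bucket", "ap-southeast-2", "photos/2024/beach day.jpg")
print(req.get_method(), req.full_url)
# GET https://example-bucket.s3.ap-southeast-2.amazonaws.com/photos/2024/beach%20day.jpg
```

Passing a request like this to `urllib.request.urlopen` would fetch a public object; the same URL pasted into a browser does the same thing, which is the “directly via the browser” path.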

And there you have it, the 3 main attractions in the world of storage.